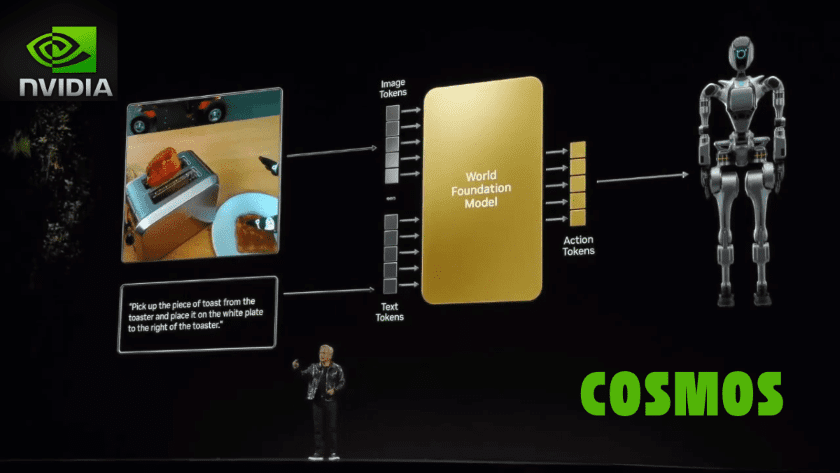

Nvidia just pulled a big lever in robotics. At SIGGRAPH 2025, the company expanded Cosmos, the family of world models it first introduced at CES in January 2025. World models are internal, predictive models of how the physical world behaves. Nvidia paired the new models with simulation libraries and compute infrastructure designed to move “physical AI” from research demos into practical developer toolchains. The platform combines vision-language reasoning models, fast synthetic-data generators, Omniverse reconstruction tools, and new server and cloud offerings so teams can build, test, and deploy robots faster and more safely.

At the center is Cosmos Reason, a 7-billion-parameter vision-language model designed to give robots memory, physics awareness, and planning ability — in short, a model that helps an embodied agent decide what to do next instead of only reacting. Alongside it, Cosmos Transfer-2 and a distilled Transfer variant turn 3D scenes into photorealistic images, video, and labeled datasets to train perception systems at scale. Nvidia also released neural reconstruction libraries for building accurate 3D worlds from sensor data and updated Omniverse tools to run large, physics-accurate simulations.

Why this matters: Two of robotics’ biggest bottlenecks are data and risk. Collecting diverse, labeled real-world footage is slow, costly, and sometimes dangerous; testing unproven behaviors on physical robots risks damage and injury. Cosmos addresses both by letting engineers generate vast, varied synthetic datasets and rehearse behaviors in realistic simulations before trying them on hardware. That shortens development cycles, reduces wear on expensive robots, and makes it practical to train for rare events (bad weather, unusual obstacles) that are hard to capture in the real world.

How teams will use it, practically: An engineer captures a factory bay with cameras and LiDAR, uses Omniverse reconstruction to build a 3D scene, runs Transfer-2 to produce thousands of labeled images and videos, and fine-tunes Cosmos Reason to predict multi-step actions. Simulations then test edge cases such as fallen boxes, partial lighting, and sensor noise, and policies that succeed in sim are fine-tuned on a much smaller real dataset. That workflow can turn months of slow, hazardous trials into iterative, repeatable cycles. The platform also plugs into existing ecosystems: Nvidia is integrating its rendering and reconstruction tools with popular open simulators such as CARLA, broadening reuse for autonomous driving and research.
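One common way to generate edge cases like these is domain randomization: instead of hand-authoring each scene, you sample many variations of lighting, clutter, and sensor quality. The sketch below shows that idea in plain Python under stated assumptions. The `Scenario` fields, value ranges, and helper names are hypothetical, and the code does not call any Cosmos, Omniverse, or CARLA API. In a real pipeline, each sampled scenario would be handed to the simulator for rendering and policy evaluation.

```python
import random
from dataclasses import dataclass


# Hypothetical scenario parameters. Names and ranges are illustrative
# assumptions, not part of any Nvidia Cosmos or Omniverse API.
@dataclass(frozen=True)
class Scenario:
    fallen_boxes: int         # obstacles dropped into the aisle
    light_level: float        # 0.1 = very dim, 1.0 = fully lit
    depth_noise_std_m: float  # std. dev. of Gaussian depth-sensor noise, in meters


def sample_scenario(rng: random.Random) -> Scenario:
    """Draw one randomized edge-case scenario (domain randomization)."""
    return Scenario(
        fallen_boxes=rng.randint(0, 5),            # inclusive range 0..5
        light_level=rng.uniform(0.1, 1.0),         # covers partial lighting
        depth_noise_std_m=rng.uniform(0.0, 0.05),  # up to 5 cm of noise
    )


def add_sensor_noise(depths: list[float], std: float, rng: random.Random) -> list[float]:
    """Corrupt ideal simulated depth readings with Gaussian noise, clamped at zero."""
    return [max(0.0, d + rng.gauss(0.0, std)) for d in depths]


def build_test_suite(n: int, seed: int = 0) -> list[Scenario]:
    """Sample n scenarios; a fixed seed makes the suite repeatable across runs."""
    rng = random.Random(seed)
    return [sample_scenario(rng) for _ in range(n)]


if __name__ == "__main__":
    suite = build_test_suite(1000, seed=42)
    # Rare combinations (dim light plus heavy clutter) show up without hand-authoring.
    hard = [s for s in suite if s.light_level < 0.3 and s.fallen_boxes >= 4]
    print(f"{len(hard)} of {len(suite)} scenarios are dim and cluttered")
```

Seeding the generator is the design choice that makes the "iterative, repeatable cycles" part concrete: rerunning with the same seed reproduces the exact scenario set, so a policy change can be compared against an identical batch of edge cases.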

Immediate use cases are clear and broad. Warehouses can train pick-and-place and navigation systems across many shelf layouts; delivery robots can learn hundreds of doorstep configurations; drones and inspection bots can rehearse tight or dangerous approaches; and healthcare or assistive robots can practice human-adjacent tasks with lower risk. For self-driving research, synthetic scenes accelerate perception models for rare but critical scenarios like night rain, road debris, and complex intersections. Several labs and companies are already testing Omniverse and Cosmos tools to speed up prototyping and reduce hardware costs.

Nvidia didn’t just release software: it paired the models with hardware and cloud options. New RTX Pro Blackwell servers and DGX Cloud integration aim to let organizations run these compute-heavy pipelines at scale, from local development to large cloud clusters. That combination — models + sim + compute — matters because realistic simulation and world-scale training are resource-intensive; having an optimized stack reduces friction for teams that need to iterate quickly.

The upside is big: faster innovation, safer rollouts, and wider access to advanced robotics workflows. Startups and smaller labs can prototype more cheaply; established firms can expand automation into harder tasks; researchers can test hypotheses on richer simulated data. We may see robots that generalize better to new environments and handle rare events more gracefully — which pushes robotics closer to everyday use in logistics, healthcare, construction, and beyond.

But the platform does not erase core challenges. First, the simulation-to-real gap remains: even the best renderings and physics approximations miss subtle real-world effects like sensor calibration quirks, material wear, and unpredictable human behavior. Models trained mainly on synthetic data still need careful real-world fine-tuning and robust validation. Second, bias and coverage in synthetic scenes matter: if virtual worlds over-represent certain environments or behaviors, robots will inherit those blind spots. Third, cost and concentration are real concerns: high-end compute may keep the most powerful pipelines in the hands of well-funded labs unless access and open tooling broaden. Finally, safety, regulation, and liability frameworks need urgent work as more capable robots leave labs for public spaces.

There are also governance and ethical angles to watch. Who decides which scenarios get simulated? Who audits a robot’s behavior before it operates near people? Nvidia and the broader community will need transparent benchmarks, third-party safety audits, and shared datasets to ensure systems are tested against diverse, realistic challenges. Open evaluation suites and community governance could help prevent narrow, commercially driven training that overlooks equity and safety.

In short, Cosmos packages the missing plumbing for real-world AI: models that reason about physical space, tools to synthesize the training data those models need, and the compute to run both simulation and learning at scale. That plumbing lowers practical barriers and could speed robotics into wider use — but it also raises the stakes for careful validation, broader access, and stronger safety rules.

When teams combine Cosmos with rigorous real-world testing, transparent evaluation, and responsible deployment, the result could be smarter, safer robots that help in factories, on streets, and in homes. But skipping those steps risks creating brittle systems that look good in simulation only to fail when the stakes are highest. For anyone building the next generation of machines, Nvidia’s Cosmos is a powerful new toolbox — and a clear reminder that engineering progress must go hand-in-hand with ethics, oversight, and public accountability.

Featured image via screengrab