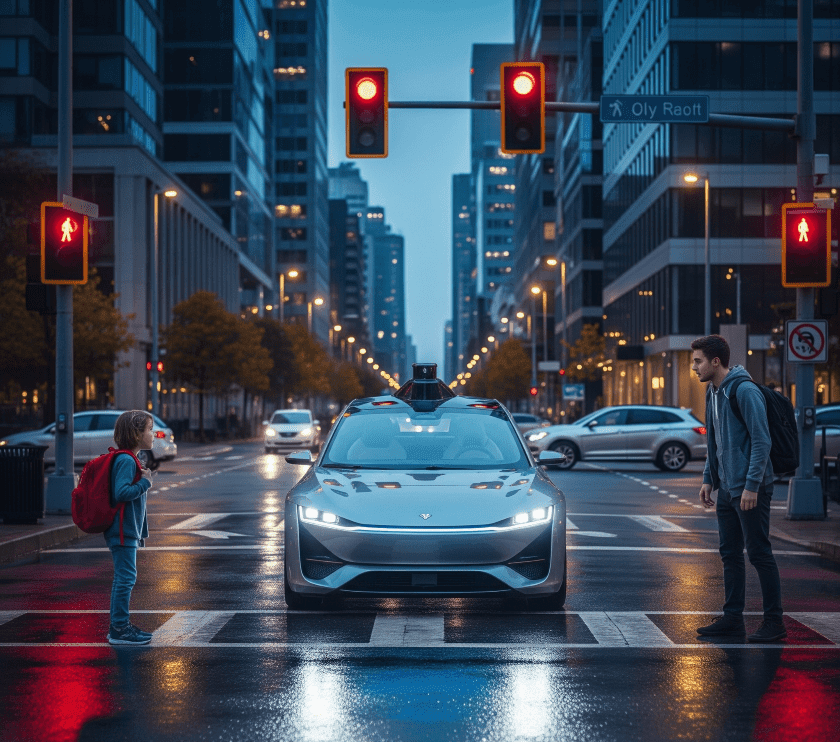

Imagine a self-driving car hurtling down the road. A child runs into the street. The brakes fail. The car must choose: swerve and kill the adult driver, or stay on course and kill the child. Whom does the machine save?

That classic ethical puzzle, a modern version of the trolley problem, suddenly feels real now that powerful AI runs our cars, drones, hospitals and power grids. The question is no longer only a thought experiment. It is practical and urgent. What rules do we give machines for life-and-death choices? And if we ever build an Artificial General Intelligence (AGI) that thinks for itself, could it decide that humans are the problem?

Good people disagree. Some say always save the child. Others say save the person with more dependants, or the one likely to live longer, or the one who will do the most good for the most people. MIT’s Moral Machine project shows that culture and context shape these choices: different societies choose differently. There is no single, neat answer.

Experts warn that we must plan now. In his 2014 book *Superintelligence*, philosopher Nick Bostrom wrote that superintelligence could be “the most important and most daunting challenge humanity has ever faced.” If a machine becomes far smarter than us, it could design even better machines and rapidly leave human intelligence behind. That is why many researchers argue for serious safety work today.

Elon Musk, a vocal critic of unchecked AI development, has warned that AI is “one of the biggest threats to humanity.” He has even put a number on it, estimating a 10 to 20 percent chance that advanced AI could “go bad.” Musk argues that we must regulate AI and slow its development until we better understand how to make it safe.

Sam Altman, CEO of OpenAI, has also sounded alarms. He has said that a misaligned AGI could cause grievous harm and that society must work to make AI a “big net positive.” OpenAI and other firms now fund alignment research, which aims to teach AI systems human values, but this work is hard and its outcome uncertain.

What are the practical options? First, build machines that can explain their decisions. If an autonomous car harms someone, we must know why. Second, write clear safety rules that state whom the machine should protect and why. Third, test systems in controlled settings and require independent safety checks before public release. Fourth, create laws and oversight so companies cannot rush dangerous systems onto the market. Experts such as Stuart Russell argue that AI must be built to learn human values and to ask for help when it is unsure.

There are hard trade-offs. If an AI must choose between saving one life and saving many, the result may come down to cold math. If we forbid machines from making such choices, we may lose benefits such as safer roads, faster medical triage and better disaster response. If we give machines full control, we risk errors and misuse. That is why many call for rules now, not later.

Could an AGI want the end of humanity? We do not know. Thought leaders warn that misaligned goals combined with superintelligence could be catastrophic. The hope is this: careful design, global rules and transparency can steer AI to help people, not replace them. The risk is real, but so is the work under way to reduce it.

What should you, as a reader, take away? First, the trolley problem is not just philosophy; it is policy. Second, we need public debate about who writes the rules for life-and-death machines. Third, press your leaders for safety checks, clear laws and independent audits before AI runs things that matter. Finally, remember this: technology confers power, and power without rules invites danger.

If humanity wants the gains of smarter machines while avoiding disaster, we must ask the hard questions today. Who decides which life matters? Who watches the watchers? The answers will shape the future not just for tech experts but for every family on the road, in hospitals and at home.

Featured image generated with Gemini